From Direct Manipulation to Indirect Delegation: The AI Renaissance of the Command Line and the Future of AI OS

Modern AI, built on large language models, is creating a new kind of command-line interface (CLI). This agentic CLI blends the power of the old CLI with the ease of a graphical interface by adding natural language understanding.

It's more than just a better tool: it marks a big shift in how people interact with machines. Users are no longer direct operators; they become managers who delegate tasks to smart agents.

In the future, interaction will be fluid and multimodal, pointing toward the rise of the AI OS. With AI, the CLI gains new strength and becomes the main way to direct a digital workforce of agents.

1. A Quest to Kill Cognitive Load

The history of human-computer interaction (HCI) isn't a random series of inventions. It's a relentless, decades-long crusade with a single target: crushing cognitive distance. Think of it as cognitive load—the mental gymnastics you have to perform to translate your intent into something a machine can actually execute.

Every major paradigm shift, from punch cards to iPhones, has been driven by this one simple economic principle: make the machine adapt to the human, not the other way around.

When Humans Served the Machine

In the early days, talking to a computer meant bending to its will. The machine set the rules, creating an intimidating barrier that kept computing locked in the ivory towers of academia and corporate R&D.

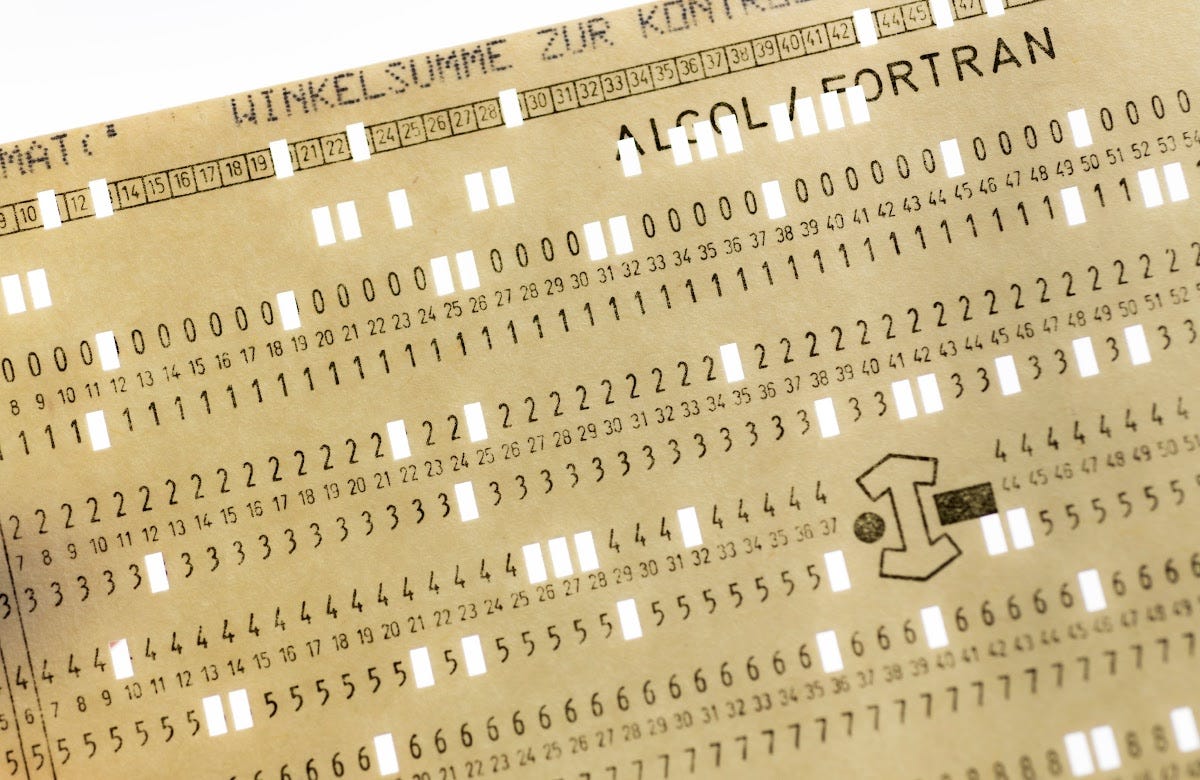

Batch Processing: This was the original interface, which was fundamentally non-interactive. You'd hand over a stack of punch cards, walk away, and pray that when you returned hours or days later, you’d have a result instead of an error. The cognitive and time gap between action and feedback was colossal. You had to mentally rehearse the entire program, because one tiny mistake meant starting all over. The cognitive load was crushing.

The Command-Line Interface (CLI): The CLI was the first real leap toward a human-computer dialogue, but it was a conversation with a brutally strict grammarian. The CLI demanded that users learn and perfectly memorize a complex, unforgiving syntax. One typo, one misplaced flag, and your command was dead. This imposed a heavy extraneous cognitive load: difficulty that comes from the tool rather than from the task itself. While the CLI gave experts (programmers, sysadmins) unprecedented power, precision, and automation, its steep learning curve effectively locked out everyone else. In this world, the machine was in charge, and you had to learn its language.

The Visual Revolution

In the 1970s, Xerox's Palo Alto Research Center (PARC) became the epicenter of an HCI earthquake. Researchers there sparked a revolution built on a radical idea: flip the script and make the machine adapt to the human.

The Vision and the Breakthrough: Visionaries like Alan Kay knew the CLI was the bottleneck holding back personal computing. Their goal was to build computers for regular folks by transforming interaction from abstract lines of code into a visual, tangible world of metaphors.

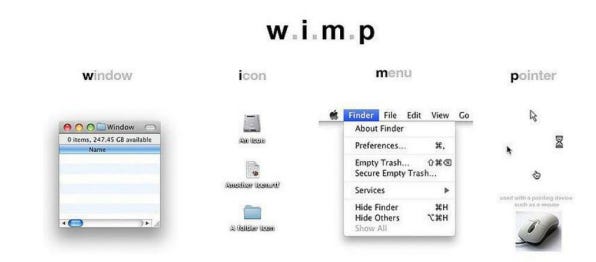

The result was the 1973 Xerox Alto, widely considered the first true personal computer. It was a beast, integrating a bit-mapped display, a three-button mouse, Ethernet, and a full-blown Graphical User Interface (GUI). The Alto's Smalltalk environment debuted the desktop metaphor, introducing the world to overlapping windows, icons, pop-up menus, and the pointer—the WIMP interface that still defines computing today.

In a now-legendary 1979 visit, Steve Jobs saw the Alto and had an epiphany. He later recalled that it was immediately obvious to him that all computers would work this way someday. That visit directly inspired Apple's Lisa and, ultimately, the Macintosh.

Apple's Mac and Microsoft's Windows finally brought the GUI to the masses. This was the great democratization of computing. Suddenly, you didn't need to memorize arcane commands; you could just point, click, and drag. The computer was no longer intimidating; it was inviting.

The Gospel of Direct Manipulation

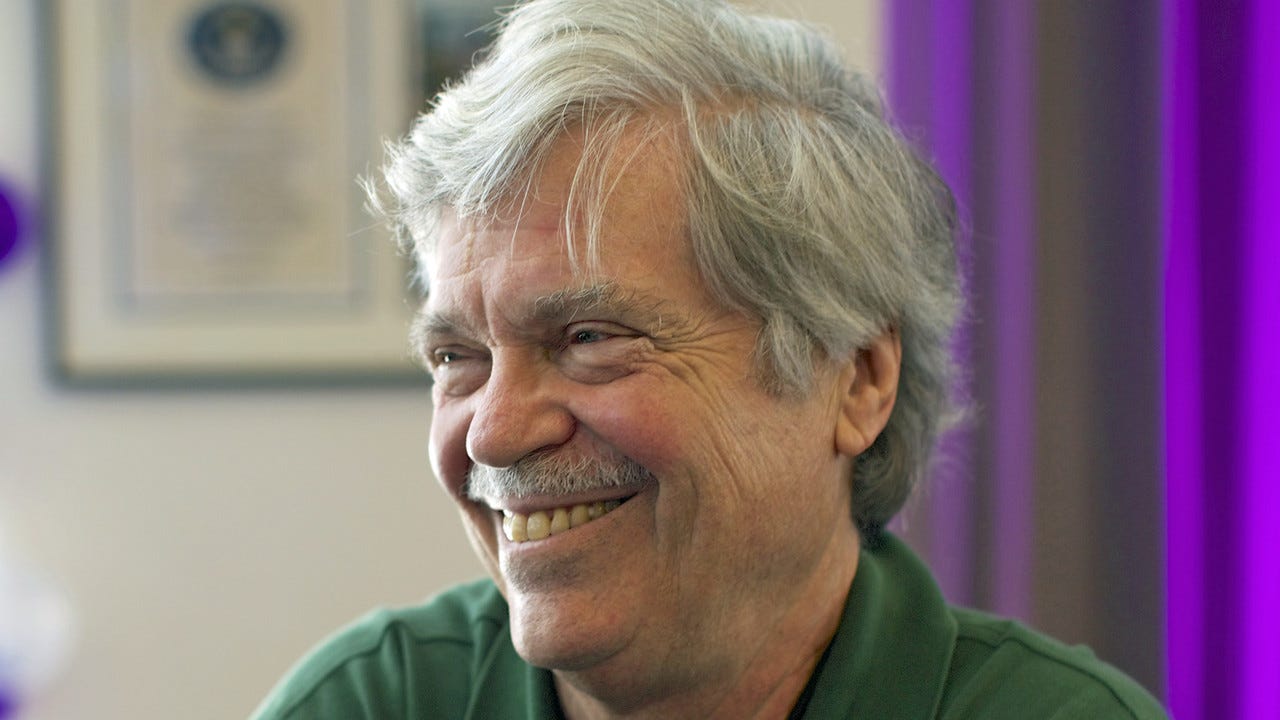

The GUI's triumph wasn't just about pretty icons. It was rooted in deep cognitive science. In the early '80s, HCI pioneer Ben Shneiderman articulated the theory of Direct Manipulation, nailing down the core logic behind the GUI's success.

The Core Principles: Shneiderman defined direct manipulation interfaces by three key features:

Continuous representation of the object of interest: The things you care about (files, folders) are always visible as icons.

Physical actions instead of complex syntax: You use physical-like actions (pointing, clicking, dragging) instead of typing commands.

Rapid, incremental, reversible operations with immediate feedback: Every action provides instant, visible feedback, making the interaction feel "direct" and forgiving.

Slashing Cognitive Load: Direct manipulation was revolutionary because it shifted the user's primary cognitive task from recall to recognition. With a CLI, you have to recall the exact command from memory. With a GUI, you just have to recognize the right icon or menu item. This dramatically lowered the mental barrier, making the interface transparent and allowing users to focus on their task, not on the tool. This is why GUIs feel intuitive.

Beyond the Desktop

The post-GUI era has simply continued this march toward more natural, lower-friction interaction.

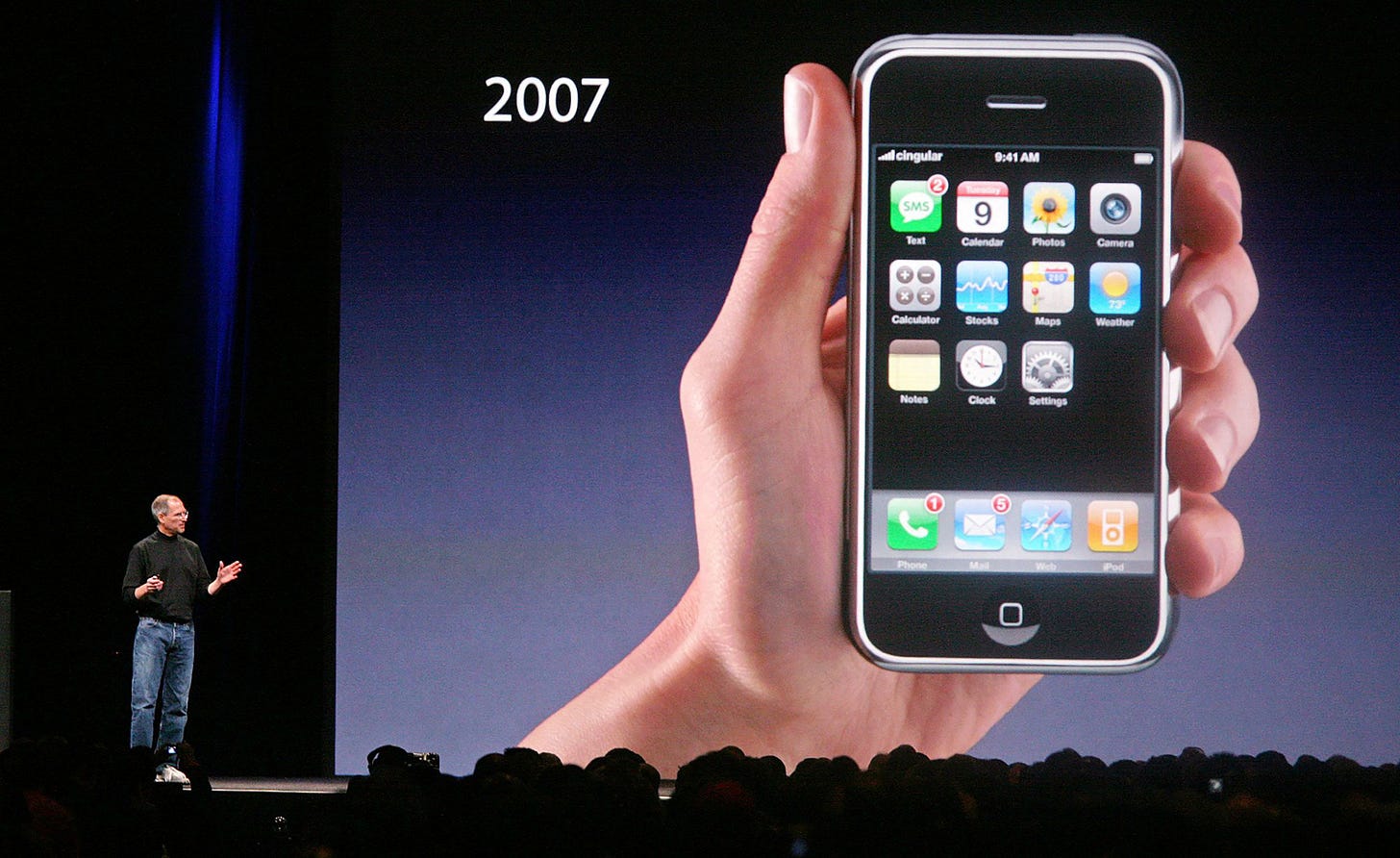

Touch & Gestures: The 2007 iPhone launch revolutionized mobile HCI with a mature multi-touch interface. Gestures like pinch-to-zoom and swiping are the logical extension of direct manipulation, making interaction even more physical and instinctive.

Voice & Conversation: The rise of assistants like Siri and Alexa represents the next step toward the ultimate natural interface: language. The goal is to let users communicate with machines through the most human channel of all, ideally eliminating traditional interfaces entirely. This trend is built on decades of research in Natural Language Processing (NLP), from early prototypes like ELIZA in the 1960s to today's powerful models.

The history of HCI reveals one clear throughline: a relentless drive to lower the communication cost between human and machine. But this created a fundamental tension between power (the scriptability and automation of the CLI) and ease of use (the intuitiveness of the GUI). Resolving this tension is the next great challenge for HCI.

2. The New Command Line: Where Power Meets Language

We are now witnessing a full-blown renaissance of the command line. But this isn't a throwback. It's a profound evolution. Modern AI, powered by Large Language Models (LLMs), is birthing a new CLI paradigm that finally resolves the historic tension between power and ease of use by injecting natural language understanding directly into the powerful, programmable environment of the terminal.

From Syntactic to Semantic

The traditional CLI is syntactic. It understands a rigid, predefined grammar. The user must perfectly translate their intent into that syntax. One typo, and it's game over. The cognitive burden is immense.

The new agentic CLI is semantic. It uses an LLM to understand the intent behind a user's natural language. The LLM acts as a real-time universal translator, taking a high-level, fuzzy goal (e.g., "Refactor this code to be more efficient") and automatically breaking it down into a sequence of precise, machine-executable commands or code changes. This slashes the cognitive load without sacrificing any of the CLI's raw power. You no longer need to memorize syntax; you just need to articulate your goal.
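To make the syntactic/semantic split concrete, here is a minimal Python sketch of the translation step. It is an illustration under loud assumptions: `plan_commands`, `Plan`, and `KNOWN_GOALS` are hypothetical names, and the "model" is a hard-coded keyword lookup standing in for a real LLM. What it shows is the shape of the interaction: a fuzzy goal goes in, a list of precise argv-style commands comes out, and nothing runs until the plan has been inspected.

```python
from dataclasses import dataclass
import shlex


@dataclass
class Plan:
    goal: str
    commands: list[list[str]]  # one argv list per pipeline stage


# Hypothetical stand-in for an LLM: keyword -> shell pipeline stages.
KNOWN_GOALS = {
    "largest files": ["du -ah .", "sort -rh", "head -n 5"],
    "python files": ["find . -name '*.py'"],
}


def plan_commands(goal: str) -> Plan:
    """Translate a natural-language goal into argv lists (toy matcher, not a real model)."""
    lowered = goal.lower()
    for phrase, stages in KNOWN_GOALS.items():
        if phrase in lowered:
            # shlex.split applies shell-style quoting rules to each stage.
            return Plan(goal, [shlex.split(stage) for stage in stages])
    # Unknown goal: return an empty plan instead of guessing.
    return Plan(goal, [])


plan = plan_commands("Show me the largest files in this project")
for argv in plan.commands:
    print(argv)
```

The design point is that the user never types `du -ah . | sort -rh | head -n 5`; they state the goal, and the plan stays visible as plain data that can be reviewed before execution.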

The New CLI Tooling

The top AI players are already shipping products that embody this new paradigm. While all are CLI-based, they represent different philosophies and strategic plays for the future of development.

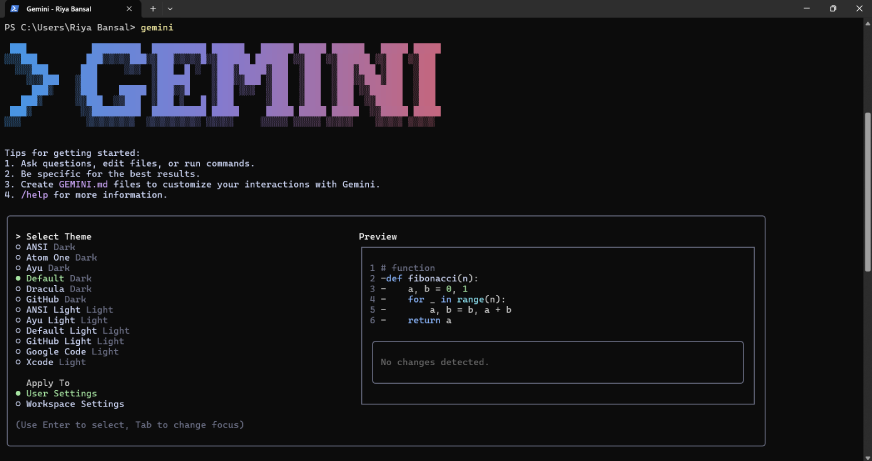

Google is positioning the Gemini CLI as an open-source AI agent that lives in the terminal. It’s designed as a versatile utility for more than just coding, extending to deep research, content generation, and task management. Gemini CLI offers a highly interactive experience. A simple npx command drops you into a session where you can use the @ symbol to easily load local files as context, enabling a continuous conversation with the AI inside the terminal.

Gemini CLI embodies the AI-supercharged shell model. It's not trying to replace the developer's local environment but to massively augment it, making the terminal itself smarter and more powerful than ever before.

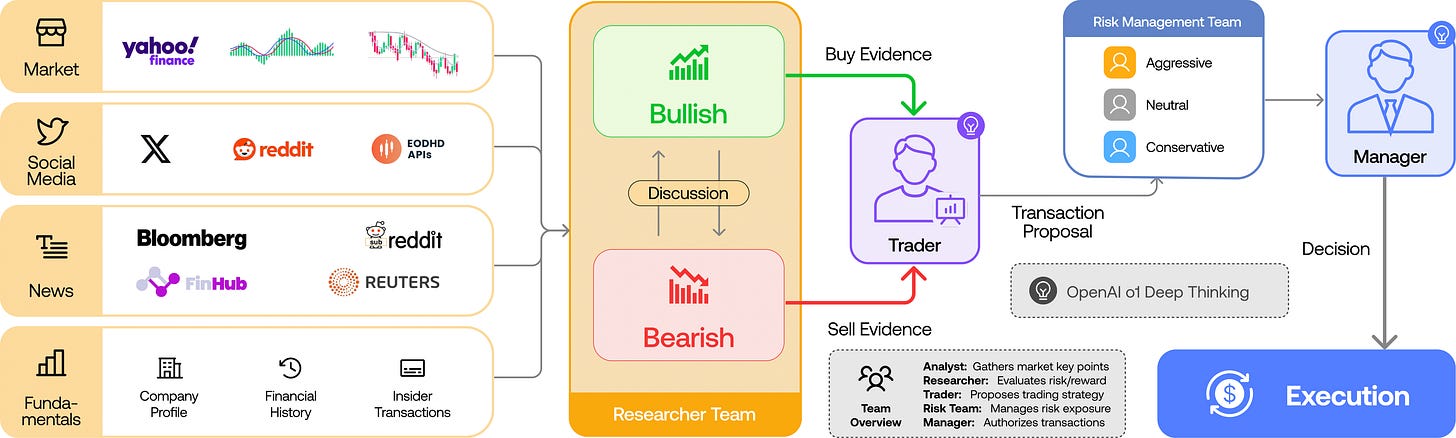

The potential of the agentic CLI goes beyond assisting a single developer. It can orchestrate entire teams of virtual experts to solve complex, domain-specific problems. TradingAgents is an audacious experiment that simulates an entire financial trading firm using multiple specialized LLM agents. This virtual firm includes a fundamental analyst for earnings reports, a sentiment analyst for social media, a technical analyst for chart patterns, bull/bear researchers for debate, a trader for execution, and a risk management team.

The system uses a hybrid model. Agents pass structured reports to each other for accuracy, but at key decision points, they engage in natural language debate to stimulate deeper reasoning. This entire complex simulation is launched, configured, and monitored from a CLI.
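The hybrid idea (structured reports for accuracy, free-text debate at decision points) can be sketched in a few lines of Python. This is not the TradingAgents code or its API; `AnalystReport`, `debate`, `decide`, and the `threshold` value are all hypothetical, and the "debate" is a simple string assembly rather than an LLM exchange.

```python
from dataclasses import dataclass


@dataclass
class AnalystReport:
    analyst: str   # e.g. "fundamental", "sentiment", "technical"
    signal: float  # -1.0 (strongly bearish) to +1.0 (strongly bullish)
    summary: str


def debate(reports: list[AnalystReport]) -> str:
    """Toy stand-in for the natural-language debate between bull and bear researchers."""
    bull = "; ".join(r.summary for r in reports if r.signal > 0) or "no case"
    bear = "; ".join(r.summary for r in reports if r.signal < 0) or "no case"
    return f"BULL: {bull}\nBEAR: {bear}"


def decide(reports: list[AnalystReport], threshold: float = 0.2) -> str:
    """Trader step: average the structured signals and map the mean to an action."""
    mean_signal = sum(r.signal for r in reports) / len(reports)
    if mean_signal > threshold:
        return "buy"
    if mean_signal < -threshold:
        return "sell"
    return "hold"


reports = [
    AnalystReport("fundamental", 0.6, "earnings beat"),
    AnalystReport("sentiment", -0.1, "mixed chatter"),
    AnalystReport("technical", 0.4, "uptrend"),
]
print(debate(reports))
print(decide(reports))
```

Keeping the reports as typed records means the numeric decision never depends on parsing prose, while the debate transcript remains available for a human to read from the terminal.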

TradingAgents showcases the ultimate potential of the agentic CLI paradigm: moving beyond a human-to-agent dialogue to a human-orchestrating-an-agent-society model. It proves the CLI can serve as the command center for complex, collaborative AI systems in highly specialized fields.

3. The Emerging Paradigm: From Direct Manipulation to Indirect Delegation

The rise of these new CLI tools signals a fundamental shift in the human-computer relationship. We are moving from an era where the user is a direct operator to one where the user is a manager, indirectly delegating tasks to autonomous agents. The theoretical groundwork for this shift was laid more than 20 years ago in a legendary debate.

Revisiting the Shneiderman-Maes Debate

In 1997, at a top HCI conference, two titans of the field—Ben Shneiderman, champion of direct manipulation, and Pattie Maes, pioneer of intelligent agents—held a historic debate that perfectly predicted our current paradigm clash.

Shneiderman's Stance: Control and Predictability. Shneiderman argued that users crave control and predictability. They want to be in the driver's seat, knowing exactly what their actions will do. Direct manipulation, by making the interface transparent and results immediate, perfectly serves this need. He worried that black-box agents would be unpredictable, robbing users of their sense of control.

Maes's Stance: Delegation and Cognitive Unloading. Maes countered with a visionary argument: as information and task complexity explode, users will be overwhelmed. Directly manipulating every detail will become impractical. Therefore, users will need to delegate tasks to intelligent assistants that can learn their preferences and autonomously handle complex, multi-step processes, freeing them from cognitive overload.

The CLI renaissance is the real-world echo of this debate. The new agentic CLIs are the interface agents Maes envisioned. And their core design challenges—ensuring user control, providing verifiable outputs, and requiring explicit approval for critical actions—are precisely the concerns Shneiderman raised. We haven't abandoned direct manipulation; we are integrating its philosophy of control with the efficiency of indirect delegation at a higher level of abstraction.
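One way to picture how Shneiderman's concerns become design features is an approval gate wrapped around every agent action. The Python sketch below is a hypothetical illustration, not the mechanism of any particular tool: `Action`, `ApprovalGate`, and the `critical` flag are assumed names. Each action is previewed and logged, and critical actions run only after an explicit yes.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class Action:
    description: str        # human-readable preview shown before anything runs
    run: Callable[[], str]  # the work itself
    critical: bool = False  # critical actions require explicit approval


@dataclass
class ApprovalGate:
    approve: Callable[[Action], bool]  # e.g. a function that prompts the user
    log: list[str] = field(default_factory=list)

    def execute(self, action: Action) -> Optional[str]:
        """Log a preview, ask for approval if the action is critical, then run it."""
        self.log.append(f"PREVIEW: {action.description}")
        if action.critical and not self.approve(action):
            self.log.append("DENIED")
            return None
        result = action.run()
        self.log.append(f"DONE: {result}")
        return result


# Usage: a gate whose approver always says no, to show the denial path.
gate = ApprovalGate(approve=lambda action: False)
gate.execute(Action("list files", lambda: "ok"))
gate.execute(Action("delete branch", lambda: "deleted", critical=True))
print(gate.log)
```

In an interactive shell, `approve` would typically prompt the user; passing it in as a function keeps the gate testable and lets scripts supply their own policy.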

The CLI as the Premier Agentic Runtime

It seems paradoxical that this futuristic interaction model is being carried by the retro command line. But it's no accident. The CLI is the ideal ecosystem for this new paradigm of indirect delegation—it is the perfect Agentic Runtime.

Efficiency & Low Overhead: GUIs are resource hogs, consuming memory and CPU to render rich visuals. The CLI is incredibly lightweight. When you're running complex AI models or orchestrating multiple agents, this efficiency is critical.

Scriptability & Composability: The core philosophy of the Unix CLI is to solve big problems by piping together small, single-purpose tools. This "composability" is a perfect match for agentic workflows. A developer can easily pipe the output of one agent (e.g., a list of files to analyze) into another (e.g., a code refactoring agent), creating powerful automated chains. This kind of flexible, on-the-fly orchestration is nearly impossible in a standard GUI.

Text as the Universal Interface: The native currency of LLMs and agents is text. They take text prompts and produce text output (code, logs, reports). As a text-native environment, the CLI is the most direct, lowest-friction medium for communication between humans and agents, and between agents themselves.
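The composability point can be sketched in Python by treating each agent as a function from lines of text to lines of text, then chaining them the way a shell chains `a | b`. The two "agents" here are hypothetical placeholders with made-up names; a real agent would call a model rather than filter strings.

```python
from functools import reduce
from typing import Callable, Iterable

# A stage consumes lines of text and produces lines of text, like a Unix filter.
Stage = Callable[[Iterable[str]], Iterable[str]]


def pipe(*stages: Stage) -> Stage:
    """Compose stages left to right, like `a | b | c` in a shell."""
    return lambda lines: reduce(lambda data, stage: stage(data), stages, lines)


def find_python_files(lines: Iterable[str]) -> Iterable[str]:
    """Hypothetical file-listing agent: keep only paths ending in .py."""
    return (line for line in lines if line.endswith(".py"))


def refactor_agent(lines: Iterable[str]) -> Iterable[str]:
    """Hypothetical refactoring agent: emit one report line per file."""
    return (f"refactored {line}" for line in lines)


workflow = pipe(find_python_files, refactor_agent)
print(list(workflow(["app.py", "README.md", "utils.py"])))
# prints ['refactored app.py', 'refactored utils.py']
```

Because every stage speaks plain text, a new agent can be dropped into the chain without either neighbor knowing about it, which is the property the Unix pipe model provides.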

The Transformation of Work

This shift from direct manipulation to indirect delegation is profoundly reshaping how we work, especially in software development.

From Coder to Reviewer: The developer's role is changing. Less time is spent on mechanical work like writing boilerplate code and more time is spent on high-level systems design, goal definition, and reviewing the output of AI agents. GitHub has reported that its AI assistant, Copilot, already generates a substantial share of the code in files where it is enabled, and that developers using it complete tasks faster.

Conversational Development (vibe coding): The software development lifecycle is becoming a series of "conversations." It's no longer a waterfall of discrete stages but a fluid, interactive process with an agent: the developer states a goal, the agent proposes a plan, the developer reviews and agrees, the agent codes and runs tests, the developer reviews again, and the agent submits for deployment. The entire process is a single, coherent narrative threaded through a conversation.

The Agent Ecosystem: The endgame of this trend is multi-agent collaboration. In such a system, different agents will play specialized roles—planner, coder, tester, reviewer—working together to complete a complex software project. It's a structure that mimics human teamwork but operates at machine speed and scale.
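A role-based team like this can be sketched as a small control loop in Python. Everything here is an assumption for illustration: `run_project` and its parameters are hypothetical, and the four roles are plain callables that would be backed by LLM agents in a real system. The sketch shows the coordination pattern only: the planner splits the goal, the coder retries each step until the tester passes it, and the reviewer signs off at the end.

```python
from typing import Callable


def run_project(
    goal: str,
    planner: Callable[[str], list[str]],
    coder: Callable[[str], str],
    tester: Callable[[str], bool],
    reviewer: Callable[[list[str]], bool],
    max_attempts: int = 3,
) -> list[str]:
    """Plan the goal into steps, code each step until its test passes, then review."""
    artifacts: list[str] = []
    for step in planner(goal):
        for _ in range(max_attempts):
            code = coder(step)
            if tester(code):
                artifacts.append(code)
                break
        else:
            # The inner loop ended without a passing attempt.
            raise RuntimeError(f"step failed after {max_attempts} attempts: {step}")
    if not reviewer(artifacts):
        raise RuntimeError("reviewer rejected the result")
    return artifacts


# Usage with stub agents standing in for real models.
result = run_project(
    "build a converter",
    planner=lambda goal: ["parse input", "write output"],
    coder=lambda step: f"def {step.replace(' ', '_')}(): pass",
    tester=lambda code: code.startswith("def "),
    reviewer=lambda artifacts: len(artifacts) == 2,
)
print(result)
```

The bounded retry and the final review step are where a human manager would plug in: raise the attempt limit, swap the reviewer for a person, or stop the run when a step keeps failing.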

The rise of the agentic CLI is not a simple tool replacement; it's a paradigm shift in human-computer collaboration. The user is evolving from a hands-on "artisan" to a strategic "project manager." This shift is happening in the CLI because its inherent advantages in efficiency, automation, and composability make it the optimal platform for managing this new digital workforce of AI agents. GUIs were designed to manipulate one object at a time; CLIs were born to command many actors at once.

4. The Trajectory and Strategic Takeaways

By mapping this history and analyzing the new paradigm, we can chart the future trajectory of HCI and offer forward-looking insights for tech leaders.

The Rise of the AI Operating System

What we're calling an Agentic Runtime today is the blueprint for a new kind of operating system. A traditional OS (Windows, macOS, Linux) manages physical resources like CPU, memory, and files. The future AI OS will manage goals and workflows.

Orchestration as a Core Function: This AI OS won't just run siloed apps. It will orchestrate a swarm of specialized agents: some from the platform provider, some from third-party developers, some built in-house. A user will issue a high-level business command like "Launch the Q3 marketing campaign," and the AI OS will automatically invoke and coordinate agents for creative generation, budget approval, ad placement, and performance analysis to achieve that complex, cross-functional goal.

The CLI as the Agent Shell: For developers and power users, the primary interface for configuring, managing, and debugging this AI OS will be an advanced, conversational command-line shell. This agent shell will be the main gateway to controlling the entire agent ecosystem.
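The orchestration role described above can be sketched as a registry that maps capabilities to agents and runs a workflow against one goal. This is a hypothetical Python illustration, not a description of any existing AI OS: the `Orchestrator` class, its method names, and the capability strings are all assumptions, and each agent is a stub function.

```python
from typing import Callable

Agent = Callable[[str], str]


class Orchestrator:
    """Hypothetical agent registry: maps capability names to agent callables."""

    def __init__(self) -> None:
        self._agents: dict[str, Agent] = {}

    def register(self, capability: str, agent: Agent) -> None:
        """Plug in an agent, whatever its source (platform, third party, in-house)."""
        self._agents[capability] = agent

    def run(self, goal: str, workflow: list[str]) -> dict[str, str]:
        """Invoke each capability in order, failing fast if any agent is missing."""
        missing = [c for c in workflow if c not in self._agents]
        if missing:
            raise LookupError(f"no agent registered for: {', '.join(missing)}")
        return {capability: self._agents[capability](goal) for capability in workflow}


orchestrator = Orchestrator()
orchestrator.register("creative", lambda goal: f"creative brief for: {goal}")
orchestrator.register("budget", lambda goal: f"budget request for: {goal}")
print(orchestrator.run("Launch the Q3 marketing campaign", ["creative", "budget"]))
```

Checking for missing agents before running anything keeps a half-finished workflow from leaving side effects behind, which matters once the agents do real work such as placing ads or approving spend.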

The Future of the Interface: A Fluid, Multi-Modal Hybrid

The future isn't a zero-sum battle between the CLI and the GUI. It's a blurring of their boundaries into a fluid, context-aware, multi-modal experience.

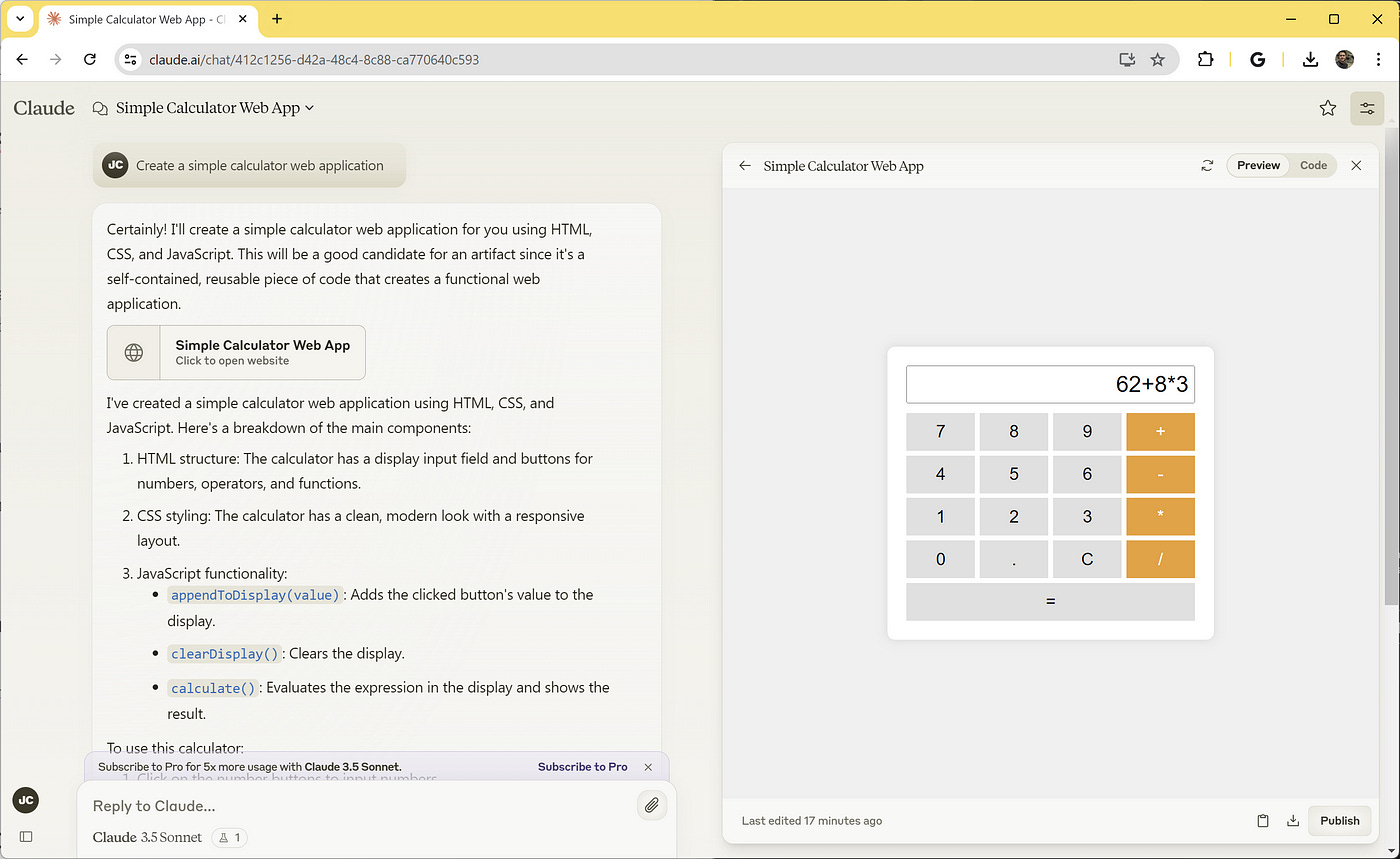

The Right Interface for the Job: A typical workflow might look like this: a user initiates a complex task in a CLI-like environment with natural language ("Generate a landing page for our new product based on this sketch"). After understanding the intent, the agent might generate not only the code but also a temporary, interactive GUI (like the Artifacts in Claude) that allows the user to directly manipulate the result, for example dragging to reposition an image or clicking to edit text. In this phase, conversation becomes the secondary tool for modification, while direct manipulation takes center stage for fine-tuning.

From WIMP to CANS: The dominant interaction model will evolve from WIMP (Windows, Icons, Menus, Pointer) to something we might call CANS: Conversational, Agentic, Nuanced, and Synthesized. Here, the core interaction is no longer with static icons but a dynamic, goal-oriented collaboration with a system that can hold a conversation, act with agency, understand nuance and context, and synthesize information from multiple sources to get the job done.

Key Takeaways

Based on this analysis, decision-makers in tech need to re-evaluate their product strategy and R&D priorities to align with the coming paradigm shift.

Shift from Tool-Centric to Workflow-Centric Design. The competitive advantage of the future won't be in designing the most beautiful UI for a single task, but in automating and optimizing an entire end-to-end workflow. Stop designing apps and start designing agent systems. The value is in solving the whole problem chain.

Treat the CLI as a First-Class Citizen for Power Users. Recognize that in complex professional domains (development, finance, science), the agentic CLI is becoming the new power-user interface. Invest in building powerful, scriptable, and developer-friendly CLI tools and agent frameworks for your platform.

Focus on Orchestration and Interoperability. The dominant platforms of the future will be those that can effectively orchestrate agents from different sources. The strategic focus should be on building open, extensible systems. Adopting standards that allow third-party agents and tools to plug in seamlessly will be key to building a powerful ecosystem.

Redefine Usability for the Agentic Era. The meaning of usability is expanding. For a GUI, it meant low cognitive load. For an agent, it must also include predictability, controllability, and verifiability. Users must be able to trust the agent, understand its reasoning, and intervene when necessary. Investing in features like clear logging, action previews, and explicit approval models is now just as important as the core AI capability.

Prepare Your Workforce for Conversational Development. The skills required for software development are evolving. Proficiency in prompt engineering, agent orchestration, and high-level systems design will become as important as traditional coding. Start training your teams to work collaboratively with AI agents, fostering talent that can clearly define problems, evaluate AI output, and provide strategic direction.

The renaissance of the agentic CLI heralds the dawn of a new era of computing. It's an era where the nature of interaction shifts from operation to delegation, and the core of computing shifts from managing files to orchestrating tasks. The companies that understand and adapt to this fundamental change first will be the ones that lead the next wave of technology.

Note: This article came from Gemini Deep Research, but I read the whole piece carefully, made edits, and added illustrations. Any mistakes are due to the limits of my own knowledge of HCI.